LLMs Are Powerful — But They Strain Real Operations

Most teams don’t question whether LLMs work. They struggle with what happens after the demo:

Rising Costs

Token spend, compute load, and latency all climb as usage scales, making LLM programs hard to justify.

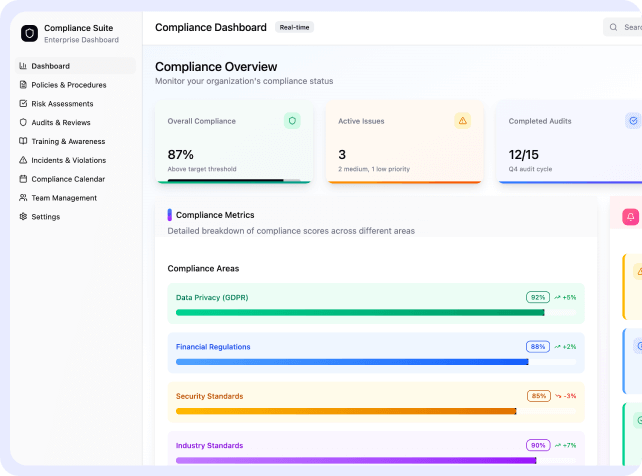

Compliance Friction

Data leaving your perimeter triggers approvals, audits, and slowdowns that stall deployment.

Unreliable Outputs

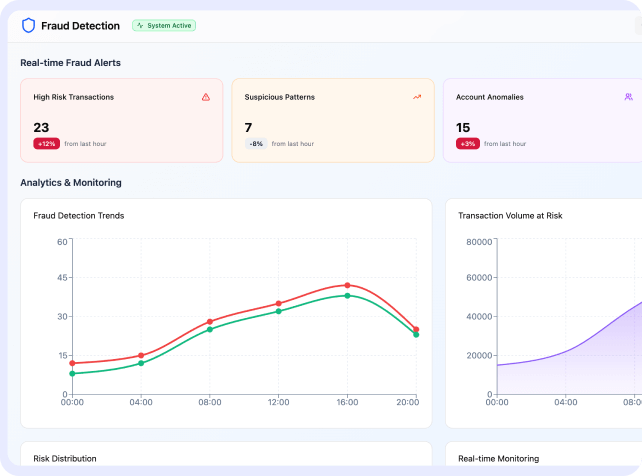

Inconsistency and hallucinations block LLMs from being trusted inside regulated or high-stakes operations.

Domain Mismatch

Generic models do not understand industry language and require heavy tuning before they become reliable.

Do you want AI that protects your data, follows your rules, and delivers measurable business outcomes — while reducing AI spend and increasing operational accuracy?

AI That Fits Your Workflow

We design and build domain-specific AI systems using private SLMs and intelligent agents that are:

Domain-Precise Intelligence

Models trained on your documents, schemas, rules, and vocabulary — not broad internet data.

Operationally Stable Outputs

Deterministic, bounded responses designed for repeatable decisions, not creative generation.

Predictable Cost at Scale

SLMs reduce inference and infrastructure overhead, typically delivering equivalent outcomes on focused workflows at a fraction of LLM cost.

Private & Auditable

Runs on-prem or private cloud with full traceability, logging, and explainability for every decision.

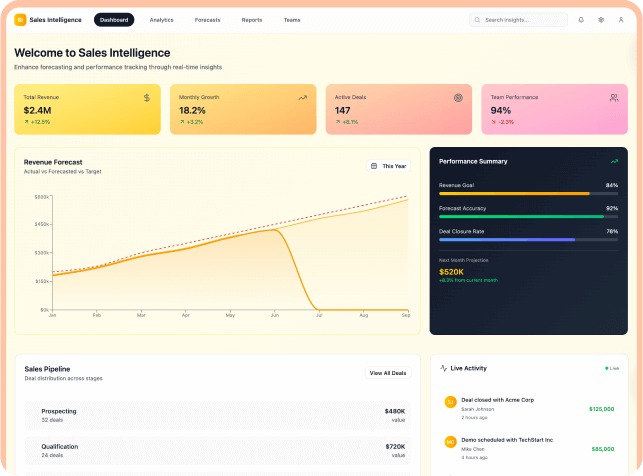

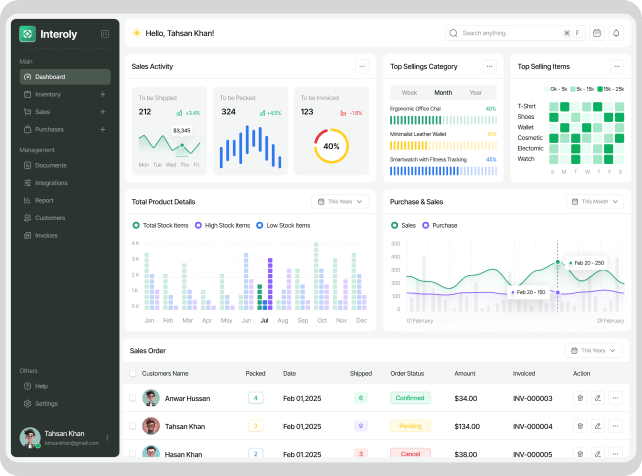

Where Small Models Create Big Impact

Pick a workflow — we’ll show ROI fast.

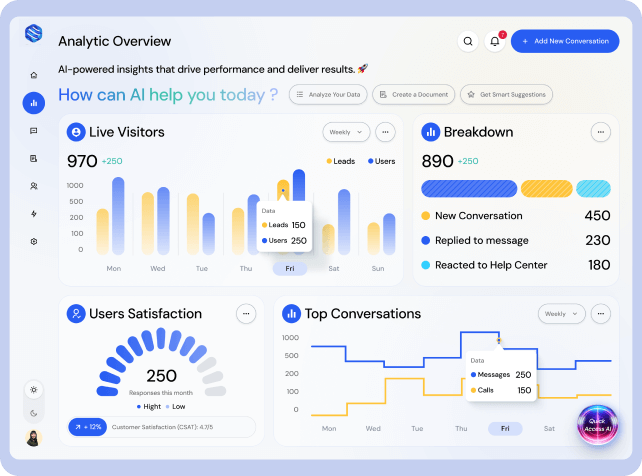

Email & Document Intelligence

Automate extraction, classification, routing, and summarization for regulated or high-volume communication.

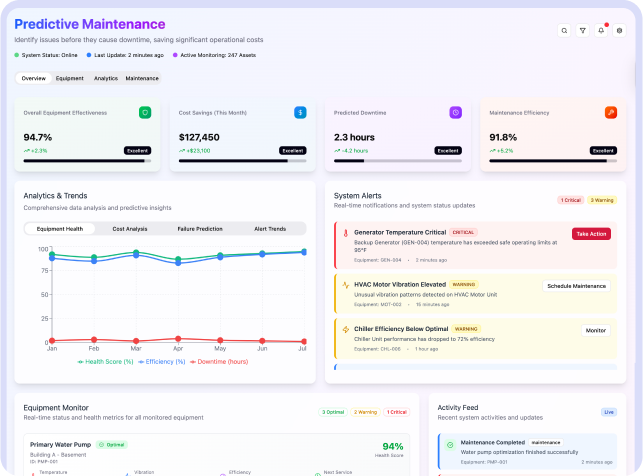

Operations Decision Automation

Reduce manual reviews in claims, underwriting, compliance checks, approvals, and exception handling.

Domain-Aware Copilots for Teams

Reliable internal assistants that use your policies and knowledge — not internet-trained guesses.

Search, Matching & Classification

Transform messy domain data into structured decisions across tickets, contracts, profiles, records, and cases.

Smaller Models Are a Better Fit

LLMs are great for broad, open-ended tasks. Enterprise AI needs something different: precision, predictability, control, and cost stability.

Because SLMs are trained narrowly for your workflows, you get:

Higher real-world accuracy

Much lower hallucination risk on in-domain tasks

Lower latency and infrastructure load

Deployment inside your compliance perimeter

Better total cost of ownership as usage grows

Built for Real Enterprise Constraints

No forced public APIs. No unmanaged data exposure. GalaxyEdge designs and delivers AI deployments that align with your governance requirements.

On-Premise Deployment

Everything — models, inference, data — runs inside your network.

Private Cloud Deployment

Dedicated VPC with controlled access, predictable costs, and seamless integration.

Hybrid Architecture

Split workloads intelligently for performance, compliance, and scale.

Data-Residency Alignment

Processing and storage stay within approved regions to satisfy regulatory and compliance requirements (GDPR, HIPAA, SOC 2, etc.).

Frequently Asked Questions

Large public models are powerful but hard to govern, costly at scale, and difficult to adapt to strict rules. We design private SLM systems that run inside your environment, are tuned to your workflows, and give you clear control over cost, residency, and risk.

For broad questions, big models help. For focused, repeatable, domain-specific work, a tuned SLM typically delivers higher accuracy and lower variability.

- Deploy only in approved regions

- Document data paths clearly

- Keep training and inference data out of shared pools

- Provide logs and storage that support access and deletion requests
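For teams that want to see what "deploy only in approved regions" can look like in practice, here is a minimal sketch. It is an illustration under stated assumptions, not a description of any specific product: the region names, the `InferenceTarget` type, and the `check_residency` helper are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical allowlist; real region names come from your own governance policy.
APPROVED_REGIONS = frozenset({"eu-central", "eu-west"})


class ResidencyError(Exception):
    """Raised when a request would be processed outside the approved regions."""


@dataclass(frozen=True)
class InferenceTarget:
    name: str    # illustrative endpoint label
    region: str  # region where processing and storage happen


def check_residency(target: InferenceTarget, approved=APPROVED_REGIONS) -> InferenceTarget:
    """Return the target unchanged if its region is approved, otherwise raise."""
    if target.region not in approved:
        raise ResidencyError(
            f"{target.name} runs in unapproved region {target.region!r}"
        )
    return target
```

Calling a guard like this before every request means an unapproved region fails loudly instead of silently processing data in the wrong place.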

With governable AI, you can always answer:

- Who used the system?

- What data was sent?

- What did the model return — and why?

GalaxyEdge builds these controls into architecture, logs, and policies.
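As a rough illustration of the three questions above, the sketch below records one audit entry per model decision. It is an assumption-laden example, not GalaxyEdge's actual implementation: the `AuditRecord` fields and the helper names are hypothetical, and it stores a SHA-256 fingerprint of the input rather than the raw data.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    user_id: str        # who used the system
    input_sha256: str   # fingerprint of what data was sent
    output: str         # what the model returned
    rationale: str      # why: for example, the rule or evidence cited
    model_version: str  # which model produced the output
    timestamp: str      # UTC time of the decision, ISO 8601


def make_audit_record(user_id: str, payload: str, output: str,
                      rationale: str, model_version: str) -> AuditRecord:
    """Build one audit entry, hashing the payload instead of storing it."""
    digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()
    return AuditRecord(user_id, digest, output, rationale, model_version,
                       datetime.now(timezone.utc).isoformat())


def to_log_line(record: AuditRecord) -> str:
    """Serialize the record as one JSON line suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)
```

Hashing the input is one possible design choice: it lets an auditor confirm which data was sent without the log itself becoming a second copy of sensitive content.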

A focused pilot or proof of concept can produce measurable results within weeks. From there, scale based on validated accuracy, cost, and workflow fit.